Looking at the visual settings for PC games, you’ll encounter a word salad that contains such tasty nuggets as MSAA, FXAA, SMAA and WWJD. OK, maybe not that last one. If you are the lucky owner of a new Nvidia GeForce RTX card, you can now also choose to enable something called DLSS. It’s short for Deep Learning Super Sampling and is a big part of the next-generation hardware features found in Nvidia RTX cards. At the time of writing, only these cards have the required hardware to run DLSS:

- RTX 2060
- RTX 2060 Super
- RTX 2070
- RTX 2070 Super
- RTX 2080
- RTX 2080 Super
- RTX 2080 Ti

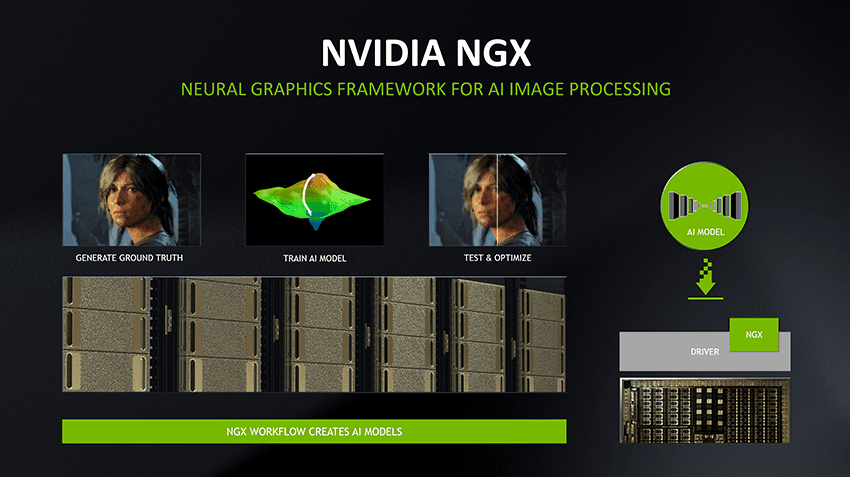

The specific hardware in question is referred to as a “Tensor” core, with each model having a different number of these specialized processors. Tensor cores are designed to accelerate machine learning tasks, of which DLSS is an example. If you don’t use DLSS, that part of the card remains idle. This means that if a game supports DLSS and you leave it switched off, you aren’t using the full capacity of your shiny new GPU.

There’s more to it than that, though. To understand what value DLSS brings to the table, we have to digress briefly into a few related concepts.

A Quick Detour Into Internal Resolutions & Upscaling

Modern TVs and monitors have what’s known as a “native” resolution. This simply means that the screen has a specific number of physical pixels. If the image you are displaying on that screen differs from the exact native resolution, it has to be “scaled” up or down to make it fit.

Scaled naively, an HD image on a 4K display would look quite blocky and jagged, just as if you’d zoomed too far into a digital photo. In practice, however, HD video looks just fine on a 4K TV, if perhaps a little less sharp than native 4K footage. That’s because the TV has a piece of hardware known as an “upscaler” that processes and filters the lower-resolution image to look acceptable. The problem is that the quality of the upscaling hardware varies wildly between display brands and models, which is why GPUs often come with their own scaling technology.

The “pro” consoles that are designed to output to a 4K display present it with a native 4K image, so that no display upscaling happens at all. This means the developers of games have complete control of the final image quality. However, most console games do not render at a native 4K resolution. They have a lower “internal” resolution, which puts less stress on the GPU. That image is then scaled up to look as good as possible on the high-resolution screen using the console’s internal scaling technology.

In effect, DLSS does the same thing on PC: the game is rendered at a lower than native resolution and DLSS then upscales the result for the connected display. Since the GPU has fewer pixels to render, in theory this leads to a significant boost in performance. While that sounds a lot like what’s happening on 4K consoles, under the hood DLSS is really something special. All thanks to “deep learning”.
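To put rough numbers on why a lower internal resolution eases the load on the GPU, here is a small sketch that compares pixel counts. The resolution table and the function names are my own illustration, and the assumption that GPU work tracks pixel count is a simplification rather than a rule.

```python
# Illustrative only: compares how many pixels are rendered at common resolutions.
# The names below (RESOLUTIONS, pixel_count, render_fraction) are hypothetical.

RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_count(width, height):
    """Number of pixels in a single frame."""
    return width * height


def render_fraction(internal, native):
    """Fraction of the native pixel count rendered at the internal resolution."""
    return pixel_count(*RESOLUTIONS[internal]) / pixel_count(*RESOLUTIONS[native])


for internal in ("1080p", "1440p"):
    share = render_fraction(internal, "4K")
    print(f"{internal} is {share:.0%} of the pixels in a 4K frame")
```

A 1080p frame has exactly a quarter of the pixels of a 4K frame, and a 1440p frame has a little under half. Everything else on the final screen has to be filled in by the upscaler.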

What’s The “Deep Learning” Bit About?

Deep learning is a machine learning technique that uses a simulated neural net. In other words, a digital approximation of how the neurons in your brain learn and create solutions to complex problems. It’s the technology that, among other things, allows computers to recognize faces and lets robots understand and navigate the world around them. It’s also responsible for the recent spate of deepfakes. And it’s the secret sauce of DLSS.

Neural networks require “training”, which basically means showing the net examples of what something should look like. If you want to teach the net how to recognize a face, you show it millions of faces, letting it learn the features and patterns that make up a typical face. If it learns the lesson properly, you can show it any image with a face in it, and it will pick it out instantly.

What Nvidia have done is to train their deep learning software on incredibly high-resolution images from the games that support DLSS. The neural network learns what the game “should” look like when rendered using supercomputer-level graphics performance. On your PC, it then takes the lower internal resolution frame and, for lack of a better word, “imagines” what it would have looked like if a much, much more powerful computer than yours had rendered the scene. If that sounds a little like black magic to you, well, you’re not alone!
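To make the training idea a little more concrete, here is a deliberately tiny toy. It is not how DLSS works internally, and every name in it is made up for illustration. Instead of a deep neural network it has a single adjustable weight, and instead of game frames it uses short rows of pixel values. The principle is the same, though: show the program low-resolution inputs next to their high-resolution references and let it adjust itself until its guesses match.

```python
# Toy illustration only; this is NOT how DLSS works internally.
# All names (make_pairs, train, upscale) are hypothetical.

def make_pairs():
    """Build (low_res, high_res) pairs from smooth 1D rows of pixel values."""
    pairs = []
    for slope in (0.01, 0.02, 0.03, 0.05):
        high = [slope * x for x in range(17)]  # high-quality reference row
        low = high[::2]                        # keep only every second pixel
        pairs.append((low, high))
    return pairs


def train(pairs, steps=200, lr=0.5):
    """Learn one weight w so that w * (left + right) predicts the missing pixel."""
    samples = []
    for low, high in pairs:
        for i in range(len(low) - 1):
            samples.append((low[i] + low[i + 1], high[2 * i + 1]))
    w = 0.0
    for _ in range(steps):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * s - t) * s for s, t in samples) / len(samples)
        w -= lr * grad
    return w


def upscale(low, w):
    """Fill the gap between each pair of neighbours using the learned weight."""
    out = []
    for i in range(len(low) - 1):
        out.append(low[i])
        out.append(w * (low[i] + low[i + 1]))
    out.append(low[-1])
    return out


weight = train(make_pairs())
print(round(weight, 3))
print(upscale([0.0, 0.2, 0.4], weight))
```

On this smooth training data the weight settles at about 0.5, so the toy has effectively “discovered” that averaging the two neighbours fills the gap. A real network has millions of weights and learns far subtler patterns, which is how it can reconstruct detail that simple averaging would smear.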

When To Use DLSS

First of all, you can only use DLSS in games that support it. Thankfully, that list is growing quickly. Each title also has its own requirements for DLSS, such as rendering at a minimum resolution, because that’s what the neural net has been trained on. However, the big brain at Nvidia doesn’t stop learning and the DLSS feature on your card will keep getting updates, expanding per-title support and quality.

The best way to figure out if you should use DLSS in your games is to eyeball the result. Compare it to traditional upscaling or anti-aliasing to see which is more pleasant. Performance is also an important deciding factor. If you are targeting 60 frames per second, but can’t get there, DLSS is a good choice. If you are already getting high frame rates, however, DLSS can actually slow things down. That’s because the tensor cores need a fixed amount of time to process each frame. Right now they can’t do it quickly enough for high frame rate play.

Essentially, DLSS is most useful when using a high-resolution display (e.g. 4K, ultrawide or 1440p resolutions) with a target frame rate of around 60 frames per second. It’s also incredibly useful when activating the other main party trick of RTX cards: ray tracing. DLSS can offset the performance loss of ray tracing quite well, with an end result that is at times spectacular.

That’s the least you need to know before deciding to go with DLSS or not. Just remember that this technology is changing rapidly, so if you don’t like the results today, come back in a few months and you just might be blown away at last.
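If you want to see why a fixed per-frame cost helps at low frame rates but hurts at high ones, the following back-of-the-envelope sketch may help. It assumes that render time scales directly with pixel count and that the upscaling step costs a flat 3 ms per frame. Both are simplifications, the 3 ms figure is a made-up placeholder rather than a measured value, and the function name is hypothetical.

```python
# Back-of-the-envelope model only. The 3 ms cost is a placeholder, not a measurement.

def fps_with_upscaling(native_fps, pixel_ratio, upscale_cost_ms):
    """Estimate the frame rate when rendering fewer pixels plus a fixed upscale step.

    native_fps      -- frame rate when rendering at native resolution
    pixel_ratio     -- internal pixels divided by native pixels (e.g. 0.25)
    upscale_cost_ms -- assumed fixed time the upscaler needs per frame
    """
    native_ms = 1000.0 / native_fps
    upscaled_ms = native_ms * pixel_ratio + upscale_cost_ms
    return 1000.0 / upscaled_ms


for native_fps in (40, 60, 300):
    estimate = fps_with_upscaling(native_fps, pixel_ratio=0.25, upscale_cost_ms=3.0)
    print(f"native {native_fps} fps -> roughly {estimate:.0f} fps with upscaling")
```

In this model a game that manages 40 fps natively gets a big lift, because the time saved on rendering dwarfs the fixed cost. A game already running at 300 fps only has about 3.3 ms per frame to begin with, so a flat 3 ms step eats up more than the lower resolution saves.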